AI makes cheating easy

How will ChatGPT-3 affect learning at BHS?

This image was generated by the AI art generator “Night Cafe” by simply inserting the prompt “student cheating on an essay.”

March 15, 2023

Writing an essay takes reading, note-taking, brainstorming, rough drafts, revision, and editing. It’s one of the hardest things to do in high school, and it can take days, if not weeks, to complete.

ChatGPT-3 can write a high school essay in a matter of seconds.

The Register has talked with several students who admitted – off the record – to using the software to cheat on schoolwork.

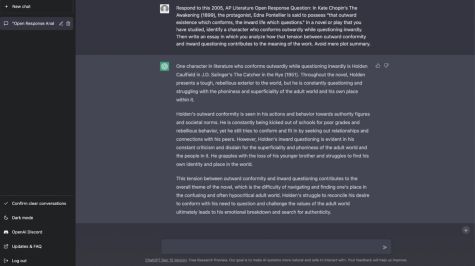

“I used it because I couldn’t figure out how to phrase something,” one student said. “[ChatGPT-3] helped me rephrase it and get more information on that subject. I got a one hundred percent on my assignment.”

ChatGPT-3 is a free-to-use application that generates text based on user prompts. Its responses tend to be grammatically and logically flawless. Users can customize the length, tone, style, and nearly any other characteristic of the output. This is made possible by its namesake machine learning model, which was trained on a massive dataset of publicly available text to produce probabilistic responses and then fine-tuned by human “AI trainers.”

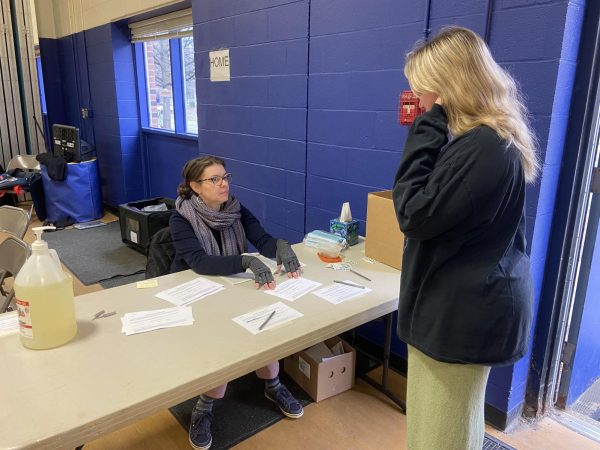

“It’s amazing how specific it can be. [It gives you] the opportunity to regenerate a response, so it will tweak and change the wording as it’s being written,” instructional coach Joe Faitak said. “I think it defies plagiarism because you’re technically not copying something someone else has written; to my knowledge, it is a new piece of text.”

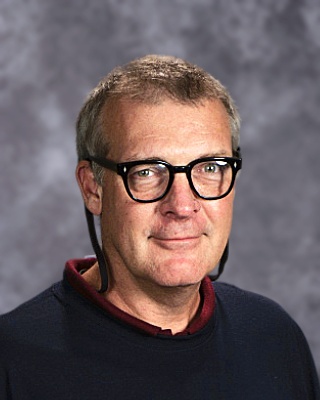

Computer science teacher Larry Cullum was less convinced of the chatbot’s usefulness for cheating.

“It’s impressive in terms of ability to generate text, it’d probably be pretty good with creative writing, but I’m not sure it’d be very good with analytical or very domain-specific knowledge,” Cullum said. “[Schoolwork completed using ChatGPT-3] would likely be pretty easy to recognize because…you tend to get the same type of response over and over. If everyone gave the same answer on a writing assignment, you can pretty much guess that they’re cheating.”

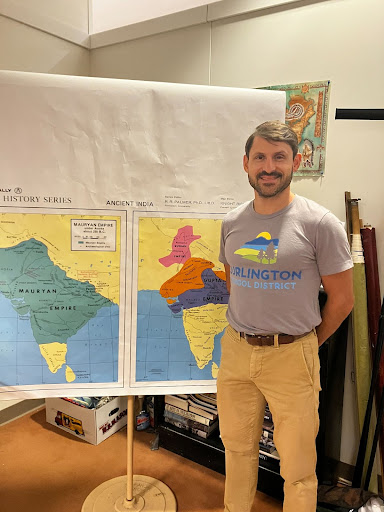

While the technology excels at producing argumentative paragraphs and essays, language arts teacher Peter McConville is not worried.

“The fact of the matter is, it’s really hard to replicate tone; I’m still going to be familiar with student writing through things like short assignments in class, and typically I have a pretty decent understanding of what people’s syntax looks like,” McConville said. “So, when something comes across our screen that sort of screams ‘this particular student didn’t write this’, it’s not in their voice, it’s not in the vocabulary that they typically use – it’s not going to be hard to detect.”

Despite this, teachers may still want to guard against chatbot-aided cheating. The technology is free to use and extremely accessible, having already accrued millions of users in the past two months. English teacher Hayden Chichester has already dealt with an incident of a student using ChatGPT on their final project.

“The writing looked fishy,” Chichester said. “We were confused about why the writing style had changed so drastically and how it didn’t really fit the prompt. We compared the writing to past work and it was completely different.”

There may be a technical solution. Just recently, college student Edward Tian released an app that can help identify works written by ChatGPT-3 by measuring levels of “randomness” throughout a text. Additionally, OpenAI is developing a “watermark” for ChatGPT-3: works produced by the chatbot would follow a specific, nearly undetectable pattern that could be decoded with a separate program and used to confirm suspicions of inauthenticity.

However, the technology will only continue to be refined. An improved version of the chatbot, ChatGPT-4, is being released in the near future and is expected to draw from a data set a hundred times the size of the original. Educators should be prepared for a paradigm shift in the classroom. Some teachers are planning on doing more writing during class time, Faitak talked about the possibility of the district blocking access to ChatGPT, and Cullum proposed making assignments more current and specific to the students.

“I think that technology forces people all the time to reevaluate what and how they teach,” McConville said. “We need to make sure that the things we teach continue to be relevant and continue to be learned.”

Faitak had similar thoughts.

“I think it forces educators to rethink how we do school.”

Faitak believes that technology such as ChatGPT could change what skills are valuable to learn.

“In the early days of telephones there used to be telephone operators. They had to sit, receive calls, and put plugs in different places. That was a skill that had to be taught. That’s gone. No one learns that anymore because the technology has improved,” Faitak said. “To me, [ChatGPT-3] is similar, it’s really going to force us to think differently about what we’re expecting.”

There are also concerns about how ChatGPT will affect the way we value critical thinking.

“What makes me nervous is the message that might be received around this technology,” McConville said. “That because [it] exists, that it is capable of writing coherent thoughts and crafting arguments, that students will no longer feel the need to learn how to do so themselves.”

McConville thinks we should be worried about what it will look like if we fall into a state of complacency, in which we allow “the way we craft language” to be dictated by machines.

“I’m worried that the biases that are being potentially spit out by those computers aren’t necessarily being picked up on by people because there is just this faith in the technology,” McConville said. “We’ve already handed over our attention to technology companies, what I’m really afraid of is that we hand over our thinking to them as well.”